The movement of the coordinates making up the line segments is based on parametric equations. Implemented in Processing and turned into a GIF using ImageMagick.
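As a rough illustration of the idea, the sketch below derives segment endpoints from a pair of parametric equations. The equations, constants, and function names are assumptions chosen for illustration, not the ones used in the original sketch, and Python stands in for Processing here.

```python
import math

def point_a(t):
    """First endpoint of a segment at parameter t (hypothetical equations)."""
    return (100 * math.sin(t / 10), 100 * math.cos(t / 10))

def point_b(t):
    """Second endpoint of a segment at parameter t (hypothetical equations)."""
    return (200 * math.sin(t / 20), 200 * math.cos(t / 15))

def segments(t, count=10, spacing=1.0):
    """Return `count` segments, each sampled slightly later in t,
    so the trailing lines follow the path of the leading one."""
    return [
        (point_a(t + i * spacing), point_b(t + i * spacing))
        for i in range(count)
    ]
```

Advancing `t` by a fixed amount on every frame and redrawing the segments produces the animation; exporting the frames and joining them is what a tool such as ImageMagick would handle.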

My design work sits at the intersection of technology, interaction, and creative expression. I draw inspiration from music, 3D graphics, human-computer interaction, wearables, computer vision, procedural and generative systems, virtual environments, and physics simulations — not just as tools, but as ways of thinking about form, behavior, and experience. Much of my practice involves building custom interactive systems using TouchDesigner, Max, Unreal Engine, Unity, Autodesk Fusion, and other media development environments and programming frameworks, often in combination with embedded electronics and sensor-driven interfaces.

I am captivated by how computational systems can create responsive, immersive, and deeply embodied experiences. Whether working with real-time simulation, sensor networks, spatial audio, or generative structures, I approach each project as an opportunity to learn and explore how humans engage with dynamic environments, and how technology can expand or transform that engagement. This perspective informs much of my recent work across installation art, instrument design, and interactive media.

Several of these explorations — including prototypes, custom tools, research studies, and ongoing development work — are documented openly. If you're interested in the technical process behind my projects, you can find source code and other materials on my GitHub.

Echo Nexus is an interactive installation exploring human-computer interaction to create a novel sensory experience through collective interplay, resulting in an immersive audiovisual environment that reacts to presence, motion, and touch. The project was developed in collaboration with technologist, sound designer, and composer Álvaro Morales, and abstract painter, sculptor, and educator Anna Schwartz. Echo Nexus was featured in the 2025 Sound Scene Festival, and was showcased at the Smithsonian's Hirshhorn Museum and Sculpture Garden, Washington, D.C. Special thanks to Elif Kavuşturan for her support and assistance during the installation and the development process.

Echo Nexus emerged from years of research in interactive instrument design, physical computing, procedural audio, embedded systems, 3D graphics, networking, fabrication, sculpture, and visual arts. It represents an ongoing investigation into how human gestures, computational systems, and perceptual feedback co-evolve to create new modes of artistic interaction.

The installation consists of a series of custom-designed, 3D-printed icosahedral Cells. Each Cell integrates embedded audio processing, inertial sensing, and networked communication, allowing it to react to participant interactions by procedurally generating sound and graphics. The motion data captured by the inertial sensor is processed in an embedded system to calculate the magnitude of acceleration and orientation of the icosahedron, attributes that carry meaningful descriptors of how participants engage with the Cell. When a participant moves a Cell, it will begin to play a procedurally generated rain-like soundscape based on these motion descriptors.
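A minimal sketch of the two motion descriptors follows, assuming raw accelerometer readings in units of g. The tilt estimate from the gravity vector is a common simplification and an assumption here; the Cells may well use a different method such as sensor fusion. Python is used for readability rather than the embedded system's own language.

```python
import math

def acceleration_magnitude(ax, ay, az):
    """Length of the acceleration vector; about 1.0 g when the Cell is at rest."""
    return math.sqrt(ax * ax + ay * ay + az * az)

def tilt_angles(ax, ay, az):
    """Estimate pitch and roll in radians from the direction of gravity.

    Only meaningful while the device is close to rest, because any
    linear acceleration is indistinguishable from gravity here.
    """
    pitch = math.atan2(-ax, math.sqrt(ay * ay + az * az))
    roll = math.atan2(ay, az)
    return pitch, roll
```

A magnitude that departs from 1 g indicates that the Cell is being shaken or moved, while the tilt angles describe how it is being held.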

These descriptors are also wirelessly sent to a computer as OSC messages using UDP over WiFi. Using Max and Jitter, the computer will draw colorful graphics in the form of trails within a real-time fluid simulation based on curl noise. As more Cells are activated, each one will contribute its own trail to the simulation, each trail distinguished by a unique color. The collective creation of trails and soundscapes emphasizes the cellular role of each participant, allowing their physical gestures to directly sculpt procedural imagery in an organic and emergent way.
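To show what such a message looks like on the wire, here is a minimal sketch that encodes float arguments following the OSC 1.0 layout and sends them over UDP. The address pattern `/cell/<id>/motion`, the argument order, and the function names are hypothetical; the source does not state the installation's actual message scheme.

```python
import socket
import struct

def osc_pad(data: bytes) -> bytes:
    """Null-terminate and pad to a multiple of 4 bytes, as OSC strings require."""
    data += b"\x00"
    return data + b"\x00" * (-len(data) % 4)

def osc_message(address: str, *values: float) -> bytes:
    """Encode an OSC message whose arguments are all 32-bit floats."""
    type_tags = "," + "f" * len(values)
    payload = b"".join(struct.pack(">f", v) for v in values)  # big-endian floats
    return osc_pad(address.encode("ascii")) + osc_pad(type_tags.encode("ascii")) + payload

def send_descriptors(host, port, cell_id, magnitude, pitch, roll):
    """Send one motion update for a Cell (hypothetical address scheme)."""
    packet = osc_message(f"/cell/{cell_id}/motion", magnitude, pitch, roll)
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(packet, (host, port))
    return packet
```

On the receiving side, a Max patch could listen on the same port with `udpreceive` and route by address. UDP suits this use because a dropped motion update is quickly replaced by the next one.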

The 3D models for the Cells were created in Autodesk Fusion, optimizing for cable routing and modular assembly, and fabricated using high-resolution PLA 3D printing. After printing, each Cell was sanded and coated with interference paints, which shift color depending on the viewing angle and lighting conditions. PLA's natural micro-ridge texture, which is typically considered a cosmetic flaw, proved to be ideal for this finish, giving the interference pigments more surface variation to refract from and resulting in a shimmering, iridescent appearance as the Cells moved.

This is the list of materials used to create Echo Nexus:

Hardware

Software

Adaptive Art Technologies is an initiative that aims to develop accessible devices that bring novel approaches and opportunities to creative expression, particularly to people with disabilities.

Currently, the main initiative of Adaptive Art Technologies is the development of an iOS application entitled AAT Synth. The design is based on a modular approach that keeps the media engines separate from the input controllers, which makes it possible to support multiple approaches to creative expression. The main idea is to craft intuitive and accessible mechanisms to interact with the system, opening the doors for everyone to create a meaningful musical experience. The project implements an audio synthesis engine, a motion controller that relies on the built-in sensors of the iOS device, and a computer vision controller that relies on feature detection through the device's camera. Future efforts aim to expand this project by including a video synthesis engine.
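The separation between controllers and engines can be sketched roughly as follows. All class, method, and parameter names are hypothetical, and the sketch is written in Python for brevity rather than in the app's own language; it only illustrates the idea that any controller can drive any engine through a shared interface.

```python
from typing import Dict, Iterable, Protocol

class Controller(Protocol):
    """Anything that can report named control values in the range 0..1."""
    def read(self) -> Dict[str, float]: ...

class Engine(Protocol):
    """Anything that accepts named parameter updates."""
    def set_parameter(self, name: str, value: float) -> None: ...

class MotionController:
    """Stand-in for a controller backed by the device's motion sensors."""
    def __init__(self, tilt: float) -> None:
        self.tilt = tilt

    def read(self) -> Dict[str, float]:
        return {"cutoff": self.tilt}

class AudioEngine:
    """Stand-in for a synthesis engine; it only records what it receives."""
    def __init__(self) -> None:
        self.parameters: Dict[str, float] = {}

    def set_parameter(self, name: str, value: float) -> None:
        self.parameters[name] = value

def route(controllers: Iterable[Controller], engines: Iterable[Engine]) -> None:
    """Forward every controller value to every engine."""
    engines = list(engines)
    for controller in controllers:
        for name, value in controller.read().items():
            for engine in engines:
                engine.set_parameter(name, value)
```

With this shape, adding a computer vision controller or a video engine means writing one new class without touching the existing ones.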

The app's icon was designed in collaboration with Elif Kavuşturan.

This video is the final presentation of my capstone project (Dec. 2023). It includes a description of the project as well as a demo of the synth.

The project is available as a TestFlight build that is being used for quality assurance and customer validation. If you're interested in trying out this AAT prototype, you may reach me at david@sound-architect.com.

A couple of instrument prototypes have been developed over the years that focus on using alternative controllers to drive an audio synthesizer engine. One of these instruments, the STCV-Synth, controls the synth engine via a wearable device and computer vision algorithms. The second instrument, Neuromusic, controls the synth engine using an EEG headset.

The STCV-Synth consists of an audio synthesizer that can be controlled using the SensorTile by STMicroelectronics, a wearable device that contains various sensors, including an inertial measurement unit (IMU) equipped with an accelerometer, a gyroscope, and a magnetometer. The SensorTile can transmit sensor data via Bluetooth Low Energy (BLE), making it an efficient and powerful wireless device.

The second controller is based on computer vision via OpenCV and Google's MediaPipe library. Features are detected from a camera data stream, and the screen coordinates of these features are tested for collision against GUI elements. This collision detection approach allows physical gestures to serve as a remote control for synthesizer settings.
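The collision test itself can be as simple as a point-in-rectangle check. The sketch below assumes the tracker reports normalized coordinates in the range 0 to 1, as MediaPipe landmark outputs typically do, and that GUI elements are axis-aligned rectangles in pixels. The `Button` type and the control names are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

@dataclass
class Button:
    """Axis-aligned GUI element in pixel coordinates (hypothetical type)."""
    name: str
    x: float
    y: float
    width: float
    height: float

    def contains(self, px: float, py: float) -> bool:
        return (self.x <= px <= self.x + self.width
                and self.y <= py <= self.y + self.height)

def to_pixels(nx: float, ny: float, frame_width: int, frame_height: int) -> Tuple[float, float]:
    """Convert normalized feature coordinates to pixel coordinates."""
    return nx * frame_width, ny * frame_height

def hit_test(buttons: List[Button], px: float, py: float) -> Optional[str]:
    """Return the name of the first button under the point, or None."""
    for button in buttons:
        if button.contains(px, py):
            return button.name
    return None
```

In use, a fingertip position would be converted with `to_pixels` on every frame and passed to `hit_test`; a non-`None` result would then trigger the matching synthesizer setting.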

The following videos demonstrate some of these prototypes in action, as well as a rundown of the available settings and controls.

Neuromusic utilizes a commercial-level Muse EEG headset to capture neural data. Using a combination of FFTs and AI, this data is processed into variable data streams, each corresponding to specific neural bands in independent frequency ranges. These bands are assigned to oscillators and processor parameters for synthesizer control and music generation.
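The frequency-band step can be sketched as follows. This is an illustration under several assumptions: the band edges are conventional EEG ranges rather than the project's own, the sampling rate is left to the caller, and a naive DFT is used to keep the example self-contained where a real implementation would use an FFT library. The AI stage is not covered.

```python
import cmath
import math

# Conventional EEG band edges in Hz (assumed, not taken from the project).
BANDS = {
    "delta": (1, 4),
    "theta": (4, 8),
    "alpha": (8, 13),
    "beta": (13, 30),
    "gamma": (30, 45),
}

def power_spectrum(samples):
    """Power per frequency bin from 0 Hz up to Nyquist, using a naive DFT."""
    n = len(samples)
    spectrum = []
    for k in range(n // 2 + 1):
        acc = sum(x * cmath.exp(-2j * math.pi * k * i / n)
                  for i, x in enumerate(samples))
        spectrum.append(abs(acc) ** 2 / n)
    return spectrum

def band_powers(samples, sample_rate):
    """Sum the spectral power that falls inside each band."""
    spectrum = power_spectrum(samples)
    resolution = sample_rate / len(samples)  # Hz per bin
    powers = {}
    for name, (low, high) in BANDS.items():
        first_bin = math.ceil(low / resolution)
        last_bin = math.ceil(high / resolution)  # exclusive upper bound
        powers[name] = sum(spectrum[first_bin:last_bin])
    return powers
```

Each band's power could then be scaled and assigned to an oscillator or processor parameter, for example alpha power driving a filter cutoff.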

This project is being developed with neuroscientist and data scientist Benyamin Meschede-Krasa. Media demonstrations of our experiments will be added to this section in the coming weeks.

The modular approach in the design process aims to create a unified interface to connect a comprehensive set of alternative controllers to drive synthesizer engines. This process will begin by solidifying robust implementations of the wearable device and computer vision controllers, as well as improving the quality and resolution of the EEG controller. In terms of the generators, the audio engine will continue to be refined and optimized. Additionally, a video synthesizer will be developed using graphics frameworks, starting with OpenGL and slowly transitioning to Metal and Vulkan.

Developed in 2023, DisOrgan is an interactive installation that celebrates the impact of disability in shaping the landscape of innovation. Many everyday objects have their origins in disability, given that designers and inventors are constantly seeking solutions to support and enhance the lives of people they love, not to mention how communities of people with disabilities are at the forefront of innovative solutions that make the world more inclusive.

In collaboration with a team of talented artists, activists, and entrepreneurs, we featured this installation as part of the Sound Scene Festival, presented at the Smithsonian Hirshhorn Museum and Sculpture Garden. In particular, I contributed to this project as an installation designer and composer.

The DisOrgan installation consisted of two interactive musical instruments, the Cart and the Pipes. The code for these instruments can be found on GitHub.

The Cart is a self-contained musical instrument that relies on six infrared proximity sensors to control a Max patch. This patch deployed the composition, which was based upon random playback of samples of everyday objects that have their roots in disability.

The Pipes are a collection of simple cylindrical elements that each play a single musical note. Each cylinder has a single ultrasonic proximity sensor that controls the loudness of its corresponding note. The cylinders are intended to be played by multiple people, revealing a hidden chord and implying that we can only hear the music collectively. The sound generation is achieved using a Daisy Pod, manufactured by Electro-Smith.
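One plausible way to turn a proximity reading into loudness is a clamped linear mapping followed by smoothing. The sketch below is an assumption throughout: the distance range, the choice that closer means louder, and the smoothing coefficient are illustrative, and Python stands in for the firmware that would run on the Daisy Pod.

```python
def distance_to_gain(distance_cm, near_cm=5.0, far_cm=60.0):
    """Map a distance reading to a linear gain in [0, 1]; closer is louder."""
    clamped = min(max(distance_cm, near_cm), far_cm)
    return (far_cm - clamped) / (far_cm - near_cm)

class SmoothedGain:
    """One-pole smoother that eases toward the target to avoid audible clicks."""

    def __init__(self, coefficient=0.1):
        self.coefficient = coefficient
        self.value = 0.0

    def update(self, target):
        self.value += self.coefficient * (target - self.value)
        return self.value
```

Calling `update(distance_to_gain(reading))` once per control cycle yields a gain that follows the participant's hand without jumping when the sensor reading is noisy.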

The Pipes were developed in collaboration with artist and technologist Alexander Wu.

MusiCodex is an ongoing instructional project. My vision is for it to eventually turn into a resource that musicians and students can use to better understand the inner workings and technologies of music production. The project implements various instructional design approaches to offer hands-on experiences, as well as literature to ensure a practical and theoretical understanding.

The practical examples were designed using Max, by Cycling '74. I chose Max as the development platform as it permits any user to interact with the scripts without needing to purchase a license. Additionally, licensed users have the capacity to edit and repurpose the designed scripts.

The modules in Markdown format and the Max patches may be found on the MusiCodex GitHub Repo.

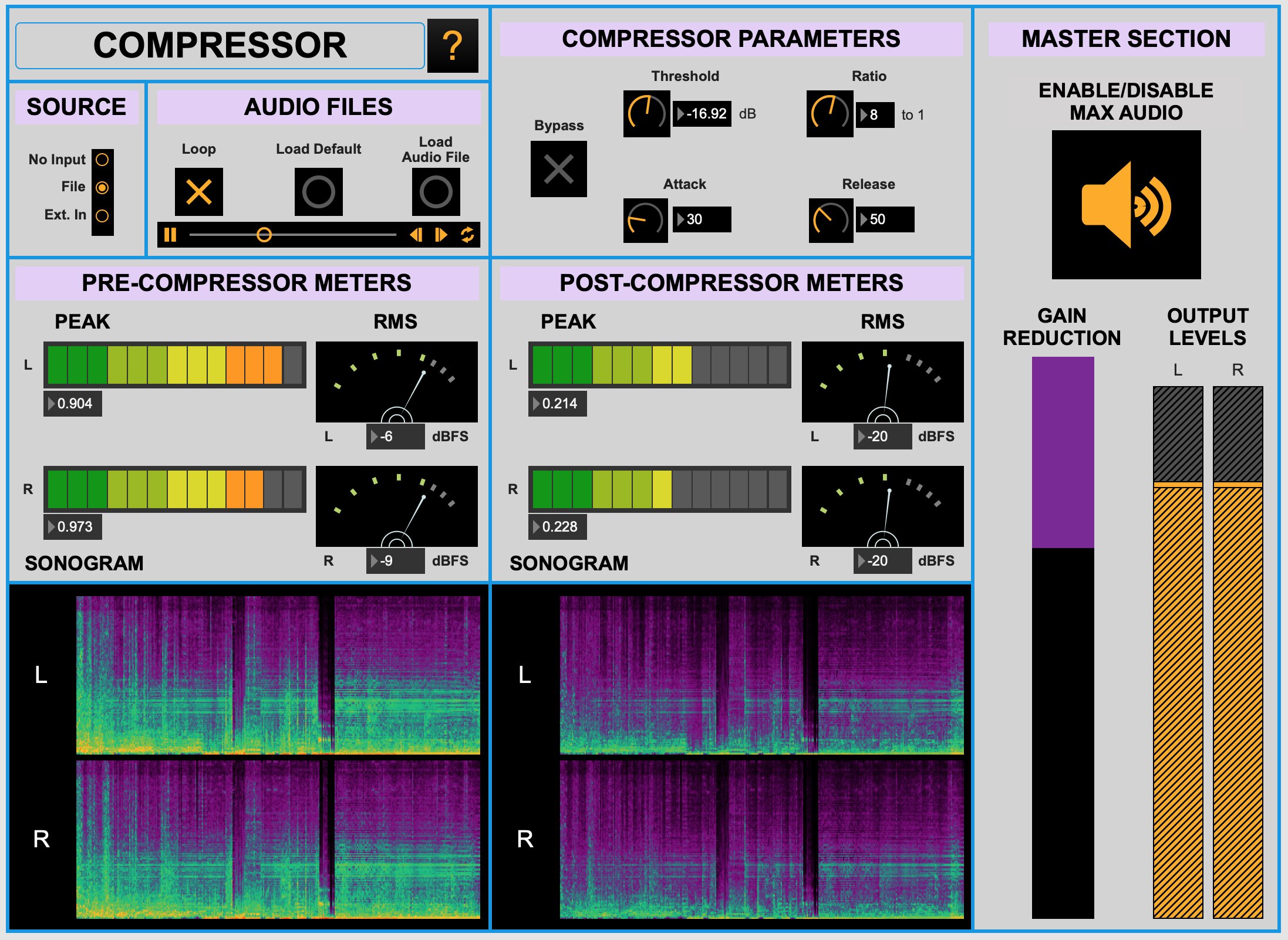

The audio compression module explains how dynamics processors work, as well as an overview of loudness as it relates to analog technologies. The Max compressor demonstrates signal interactions using a peak meter, an RMS meter, and a spectrogram.

Coded in C++ using OpenGL and SDL2, the 3D Sine Wave Visualizer offers an opportunity to interact with 3D sine waves via an event handler, which allows:

3D Sine Line Render - Side to Side

3D Sine Fill Render - Side to Side

3D Sine Points Render - Center Out
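The difference between the "Side to Side" and "Center Out" renders can be sketched as two height functions evaluated over a grid. The formulas, parameter names, and grid layout below are assumptions for illustration and are not taken from the visualizer's C++ source.

```python
import math

def side_to_side(x, z, t, amplitude=1.0, wavenumber=1.0, speed=1.0):
    """Wave that travels along the x axis; the height ignores z."""
    return amplitude * math.sin(wavenumber * x - speed * t)

def center_out(x, z, t, amplitude=1.0, wavenumber=1.0, speed=1.0):
    """Wave that radiates from the origin; the height depends on the radius."""
    radius = math.hypot(x, z)
    return amplitude * math.sin(wavenumber * radius - speed * t)

def grid_vertices(height_fn, t, size=4, step=1.0):
    """Return (x, y, z) vertices on a square grid centered at the origin."""
    vertices = []
    for i in range(-size, size + 1):
        for j in range(-size, size + 1):
            x, z = i * step, j * step
            vertices.append((x, height_fn(x, z, t), z))
    return vertices
```

The same vertex list could be drawn as points, connected as lines, or filled as triangles, which corresponds to the three render styles named above.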

This project incorporates analog audio synthesizers (Moog Subsequent37 and Eurorack Synth), digital graphics (Processing 3), and Arduino boards and controllers. Cycling '74 Max 8 is used as the routing brain, and interfacing with the Eurorack Synth is achieved using the Monome Crow module, which converts data sent by Max 8 via USB to Control Voltage (CV) signals. The Arduino controllers modify various aspects of the musical and visual performance, including tempo, waveshapes and octave, position of the rendered ellipse, and colors. They do this by sending serial data over different ports to several destinations, including Max, Processing, and the Moog Subsequent37 synthesizer.

GitHub Repository

These are sketches in visual composition relying on image processing, procedural geometry, and shaders, made using TouchDesigner.

Displaced Colors is a series of illustrations exploring multidirectional displacement. The scripts are designed to have four ellipses spread in different directions based on permutations of the given displacement values. The script will stop and save an image once all four ellipses converge in the center.
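One way to read this behavior is sketched below, with loud caveats: it assumes the four ellipses start at the center, each moves with a different sign combination of one displacement pair, and the canvas wraps around at its edges so the ellipses eventually meet again. None of these details are confirmed by the original Processing scripts, and the function name is hypothetical.

```python
from itertools import product

def frames_until_convergence(width, height, dx, dy, max_frames=100_000):
    """Count frames until all four ellipses are back at the center.

    Assumes integer displacements and a canvas that wraps at its edges.
    Returns None if they do not converge within max_frames.
    """
    center = (width // 2, height // 2)
    # Four directions: (+dx, +dy), (+dx, -dy), (-dx, +dy), (-dx, -dy).
    velocities = [(sx * dx, sy * dy) for sx, sy in product((1, -1), repeat=2)]
    positions = [center] * 4
    for frame in range(1, max_frames + 1):
        positions = [
            ((x + vx) % width, (y + vy) % height)
            for (x, y), (vx, vy) in zip(positions, velocities)
        ]
        if all(position == center for position in positions):
            return frame  # the point at which a script would save the image
    return None
```

Under these assumptions, different displacement values change how long the ellipses travel before meeting, and therefore how dense the resulting image is.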

Additional variations outline basic geometric shapes, based on explorations of geometric outlines and programmatic generative approaches.

Developed with Processing.